Burton is a history professor and director of the Humanities Research Institute at the University of Illinois, Urbana-Champaign. She is a public voices fellow with The OpEd Project.

When the history of Claudine Gay’s six-month tenure as Harvard’s president is written, there will be a lot of copy devoted to the short time between her appearance before Congress and her resignation from the highest office at one of the most prestigious and powerful institutions of higher education.

Two narratives will likely dominate.

One will be the orchestrated campaign by the right, outlined in clinical, triumphant detail by conservative activist Chris Rufo, to mobilize its highly coordinated media and communications machine to stalk Gay and link her resignation to accusations of plagiarism.

The other will be the response of liberal pundits and academics who saw in Gay’s fall a familiar pattern of pitting diversity against both excellence and merit, especially in the case of Black women, whose successes are taken to mean they must be put back in their place.

Historians will read those two narratives as emblematic of the polarization of the 2020s, and of the way the political culture wars played out on the battleground of higher education.

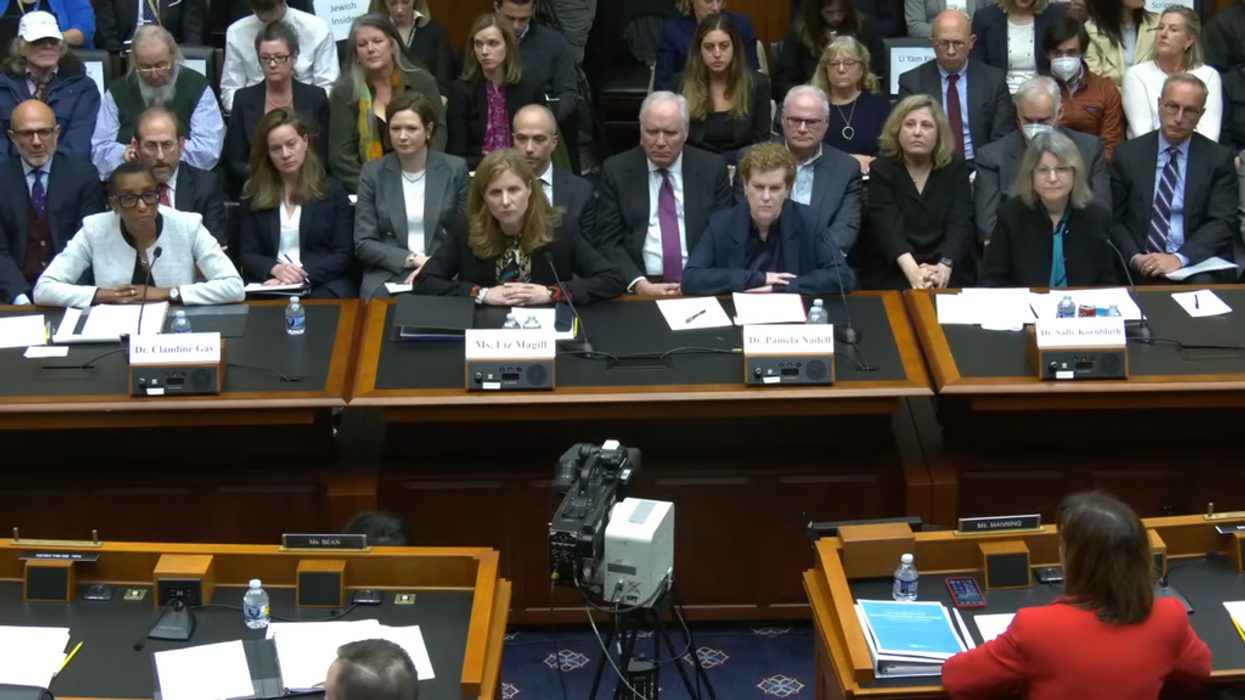

There must, of course, be a reckoning with the role that the Oct. 7, 2023, attack by Hamas on Israel and the killing of tens of thousands of Palestinians in the war on Gaza played in bringing Gay to book. And the congressional hearings will be called what they were: a show trial carried on with the kind of vengeance characteristic of mid-20th century totalitarian regimes.

Who knows, there may even be an epilogue that tracks the relationship of Gay’s downfall to the results of the 2024 presidential election.

But because the archive available to write this history is not limited to the war of words on the right and the left, the story historians tell will hang on the most stunning, and underplayed, takeaway of all.

And no, it’s not that Melania Trump plagiarized from Michelle Obama’s speech.

It’s the fact that in the middle of a news cycle in which the media could not stop talking about the rise of ChatGPT, with its potential for deep fakery and misinformation and plagiarism of the highest order, what felled Harvard’s first Black woman president were allegations of failing to properly attribute quotes in the corpus of her published research.

Yes. In an age when the combination of muted panic and principled critique of ChatGPT across all levels of the U.S. education system meets with the kind of scorn, or patronizing reassurance, that only a multibillion-dollar industry hellbent on financializing artificial intelligence beyond anything seen in the history of capitalism could mobilize, what brought a university president to her knees were accusations that she relied too heavily on the words of others, such that the “truth” of her work was in question.

Falsehoods about everything from election results to disinfectant as a cure for Covid-19 are standard fare on the far right. The irony is that those on the right banked on the assumption that the biggest disgrace a Harvard professor could face is an accusation of plagiarism.

Chroniclers of this moment will not fail to note the irony that we were living in the surround-sound of ChatGPT, which will surely go down as the biggest cheating engine in history. Students are using it to do everything from correcting their grammar to outright cutting and pasting text generated by AI and calling it their own. There is a genuine crisis in higher education around the ethics of these practices and about what plagiarism means now.

There’s no defense of plagiarism regardless of who practices it. And if, as the New York Post reports, Harvard tried to suppress its own failed investigation of Gay’s research, that’s a serious breach of ethics.

Meanwhile historians, who look beyond the immediacy of an event in order to understand its wider significance, will call attention to the elephant in the room in 2024: the potentially dangerous impact of AI tools, ChatGPT and others like it, on our democracy. While AI can assist in investigative reporting, it can also be abused to mislead voters, impersonate candidates and undermine trust in elections. This is the wider significance of the Gay investigation.

And worse: The technology got praise for its ability to self-correct (to mask the inauthenticity of its words more and more successfully) in every story that covers the wonder, and the inevitability, of AI.

There’s no collusion here. But it’s a mighty perverse coincidence hiding in plain sight.

So when the history of our time is written, be sure to look for the story of how AI’s capacity for monetizing plagiarism ramped up as Claudine Gay’s career imploded. It will be in a chapter called “Theater of the Absurd.”

Unless, of course, it’s written out of the history books by ChatGPT itself.