The Anti-Defamation League has a long history of countering hate, bias, and harmful misinformation, which is to say the organization has some strong opinions about what happens on social media. Continuing our exploration of digital disinformation and free speech, Civic Genius chatted with Lauren Krapf, Technology Policy & Advocacy Counsel at the Anti-Defamation League, about how the ADL thinks tech companies can do better and what misinformation looks like in the real world – both online and off.

Podcast: Misinformation, hate and accountability

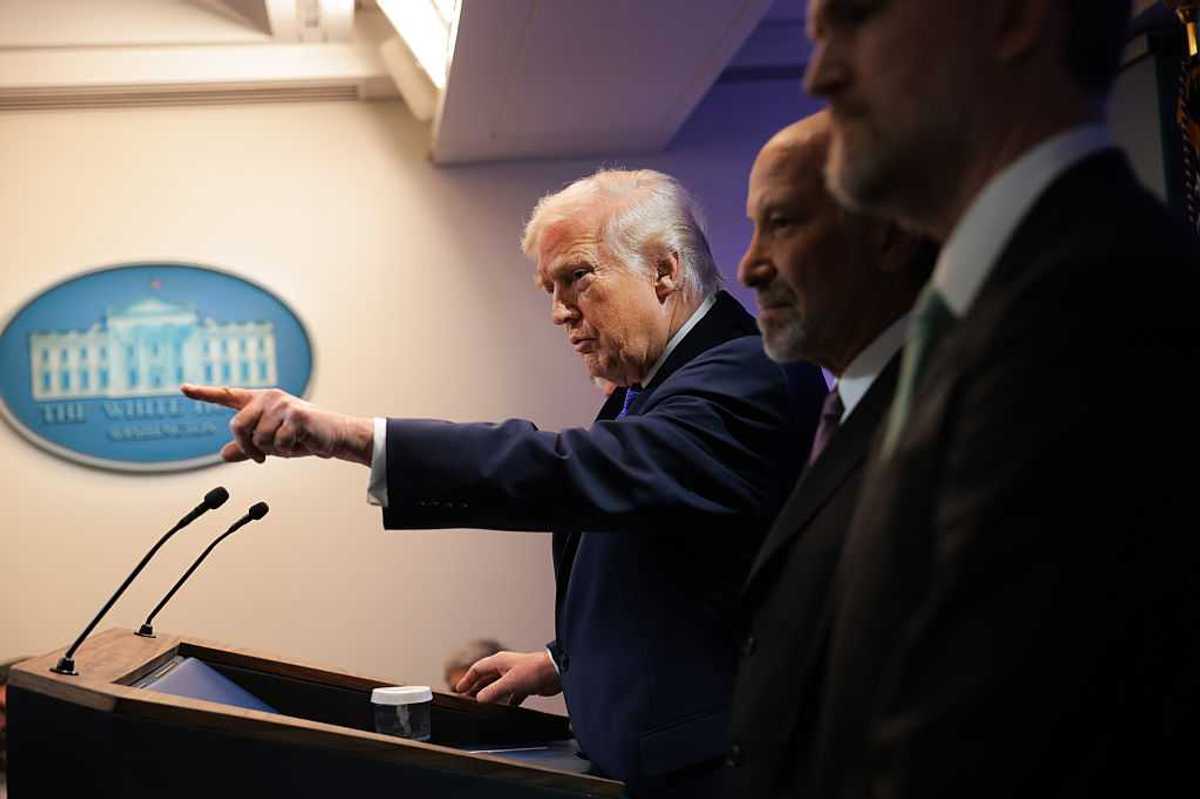
