Artificial intelligence (AI) has been heralded as a technological revolution that will transform our world. From curing diseases to automating dangerous jobs to discovering new inventions, the possibilities are tantalizing. We’re told that AI could bring unprecedented good—if only we continue to invest in its development and allow labs to seize precious, finite natural resources.

Yet, despite these grand promises, most Americans haven’t experienced any meaningful benefits from AI. It has yet to meaningfully address most health issues, and for many people it isn’t significantly improving everyday life beyond drafting emails and making bad memes. In fact, AI use remains concentrated among a narrow segment of the population: highly educated professionals in tech hubs and urban centers. An August 2024 survey by the Federal Reserve and Harvard Kennedy School found that although 39.4% of U.S. adults aged 18 to 64 reported using generative AI, adoption varies sharply. Workers with a bachelor's degree or higher are twice as likely to use AI at work as those without a college degree (40% vs. 20%), and usage is highest in computer and mathematical occupations (49.6%) and management roles (49.0%).

For the majority of Americans, especially those in personal services (12.5% adoption) and blue-collar occupations (22.1% adoption), AI remains an abstraction: something that belongs to the future rather than the present.

While the rewards of AI are still speculative, its costs are becoming increasingly tangible, and the people paying those costs are not the ones benefiting from AI today. Much of the burden of AI’s development is falling squarely on the American West, on both its people and its land. According to recent research, data centers, which are crucial for AI deployment, are consuming a growing share of the country's electricity: about 3% of all U.S. electricity in 2022, a share projected to reach 9% of total U.S. consumption by 2030.

This surge in energy demand is particularly significant for the Western United States, with its concentration of tech hubs and data centers. Moreover, the carbon dioxide emissions from data centers may more than double between 2022 and 2030, further intensifying the environmental impact on these regions.

Here’s why: developing and deploying AI requires enormous amounts of energy. Advanced machine learning models demand computing power on a scale that most people can barely comprehend. Recent International Energy Agency projections highlight the magnitude of this demand: global electricity consumption from data centers, cryptocurrencies, and AI is expected to reach between 620 and 1,050 terawatt-hours (TWh) by 2026. To put that in perspective, 1,000 TWh could provide electricity to about 94.3 million American homes for an entire year.

All that energy has to come from somewhere. Increasingly, it’s coming from the West, the part of the country that has long been tapped to fuel the nation’s ambitions, from oil and gas to solar, wind, and hydropower.

This energy extraction is putting immense pressure on the West’s already strained resources. Land is being consumed, water is being diverted, and communities are being disrupted, all to keep the lights on in tech labs far removed from the realities of life on the ground. The irony is that the very regions making AI possible are the least likely to benefit from it.

The rush to ramp up energy production for AI feels eerily familiar. We’ve seen these “get rich quick” schemes before—industries that swoop into rural areas, extract valuable resources, and leave environmental and social destruction in their wake. The West has been exploited before by out-of-state interests with big promises and shallow commitments, and AI risks becoming the latest chapter in that story.

We need to have an honest conversation about the true costs of AI development—particularly when it comes to energy consumption. AI labs may talk about curing diseases and inventing new technologies, but until those breakthroughs become reality, the rest of us—especially those in the West—are left footing the bill. And right now, that bill is being paid in the form of depleted resources and communities that are being squeezed for the sake of a future that remains distant and uncertain.

The truth is, we can’t continue to deplete our resources in the hope that AI’s promises will eventually materialize. We must demand accountability and transparency from those developing AI. Where is the energy coming from? Who is being impacted? And most importantly, who will benefit?

AI’s future may hold incredible potential, but we must make sure that we’re not sacrificing the West’s present for a future that may never arrive. If AI is going to reshape our world, it must do so in a way that lifts up all Americans, not just a select few. Until then, we need to be clear-eyed about the costs—and demand better.

Frazier is an adjunct professor at Delaware Law and an affiliated scholar of emerging technology and constitutional law at St. Thomas University College of Law.

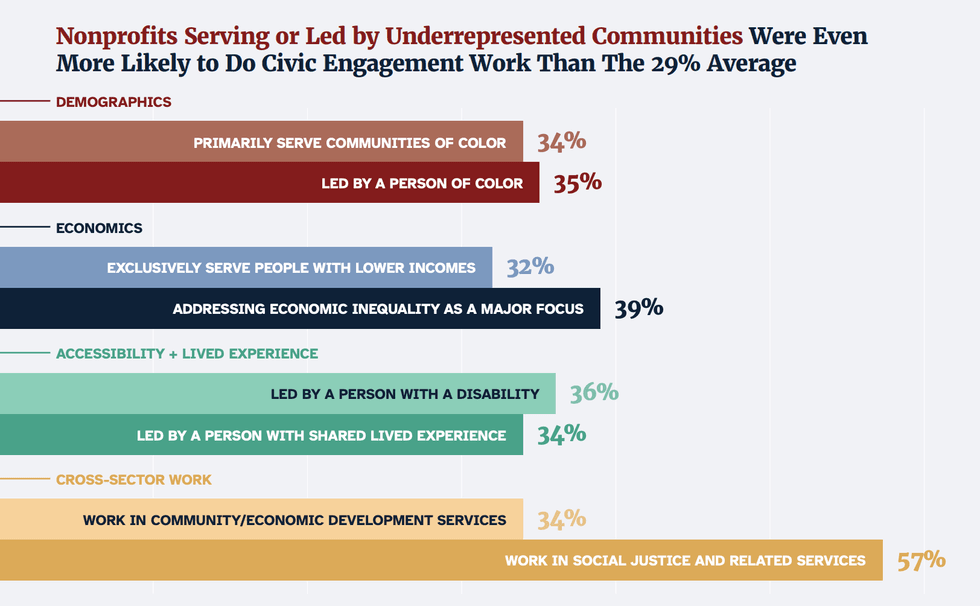

"On the Frontlines of Democracy" by Nonprofit Vote,

"On the Frontlines of Democracy" by Nonprofit Vote,

Trump & Hegseth gave Mark Kelly a huge 2028 gift