We’re all familiar with the motivating cry of “YOLO” right before you do something on the edge of stupidity and exhilaration.

We’ve all seen the “TL;DR” section that shares the key takeaways from a long article.

And we’ve all experienced “FOMO” when our friends make plans and we feel compelled to tag along just to make sure we’re not left on the sidelines of an epic experience.

Let’s give a name to our age’s most haunting anxiety: TFWM—The Future We’ll Miss. It’s the recognition that future generations may ask why, when faced with tools to cure, create, and connect, we chose to maintain the status quo. Let’s run through a few examples to make this a little clearer:

- AI can detect breast cancer earlier than humans and save millions in treatments and perhaps even thousands of lives. Yet, AI use in medical contexts is often tied up in red tape. #TFWM

- A new understanding of the interior design of cells, gained via AI tools, has the potential to accelerate drug development. Yet AI researchers are still struggling to find the computing power necessary to run their experiments. #TFWM

- Weather forecasts empowered by AI may soon allow us to detect storms ten days earlier. A shortage of access to quality data may delay improvements and adoption of these tools. #TFWM

- Firefighters have turned to VR exercises to gain valuable experience fighting fires in novel, extreme contexts. It’s the sort of practice that can make a big difference when the next spark appears. Limited AI readiness among local and state governments, however, stands in the way. #TFWM

I could go on (and I will in future posts). The point is that in several domains, we’re making the affirmative choice to extend the status quo despite viable alternatives to further human flourishing. Barriers to spreading these AI tools across jurisdictions are eminently solvable. Whether it’s budgetary constraints, regulatory hurdles, or public skepticism, all of these hindrances can be removed with enough political will.

So, why am I trying to make #TFWM a “thing”? In other words, why is it important to increase awareness of this perspective? The AI debate is being framed by questions that have distracted us from the practical policy challenges we need to address to bring about a better future.

The first set of distracting questions is some variant of: "Will AI become a sentient overlord and end humanity?" This is a debate about a speculative, distant future that conveniently distracts us from the very real, immediate lives we could be saving today.

The second set of questions is along the lines of “How many jobs will AI destroy?” This is a valid, but defensive and incomplete, question. It frames innovation as a zero-sum threat rather than asking the more productive question: “How can we deploy these tools to make our work more meaningful, creative, and valuable?”

Finally, there’s a tranche of questions related to some of the technical aspects of AI, like “Can we even trust what it says?” This concern over AI "hallucinations," while a real technical challenge, is often used to dismiss the technology's proven, superhuman accuracy in specific, life-saving domains, such as in medical settings.

A common thread ties these inquiries together. These questions are passive. They ask, “What will AI do to us?”

TFWM flips the script. It demands we ask the active and urgent question: “What will we fail to do with AI?”

The real risk isn't just that AI might go wrong. The real, measurable risk is that we won't let it go right. The tragedy is not a robot uprising that makes for good sci-fi but bad public policy; it's the preventable cancer, the missed storm warning, the failed drug trial. The problem isn't the technology; it's our failure of political will and, more pointedly, our failure of legal and regulatory imagination.

This brings us to why TFWM needs to be a "thing."

FOMO, for all its triviality, is a powerful motivator. It’s a personal anxiety that causes action. It gets you off the couch, into the Lyft, and into the party.

TFWM must become our new civic anxiety. It’s not the fear of missing a party; it's the fear of being judged by posterity. It is the deep, haunting dread that our grandchildren will look back at this moment of historic opportunity and ask us, “You had the tools to solve this. Why didn't you?”

This perspective creates the political will we desperately need. It reframes our entire approach to governance. It shifts the burden of proof from innovators to the status quo. The question is no longer, "Can you prove this new tool is 100% perfect and carries zero risk?" The question becomes, "Can you prove that our current system—with all its human error, bias, cost, and delay—is better than the alternative?"

YOLO, FOMO, and TL;DR are shorthand for navigating our personal lives. TFWM is the shorthand for our collective responsibility. The status quo is not a safe, neutral position. It is an active choice, and it has a body count. The future we'll miss isn't inevitable. It's a decision. And right now, we are deciding to miss it every single day we fail to act.

Kevin Frazier is an AI Innovation and Law Fellow at Texas Law and author of the Appleseed AI Substack.

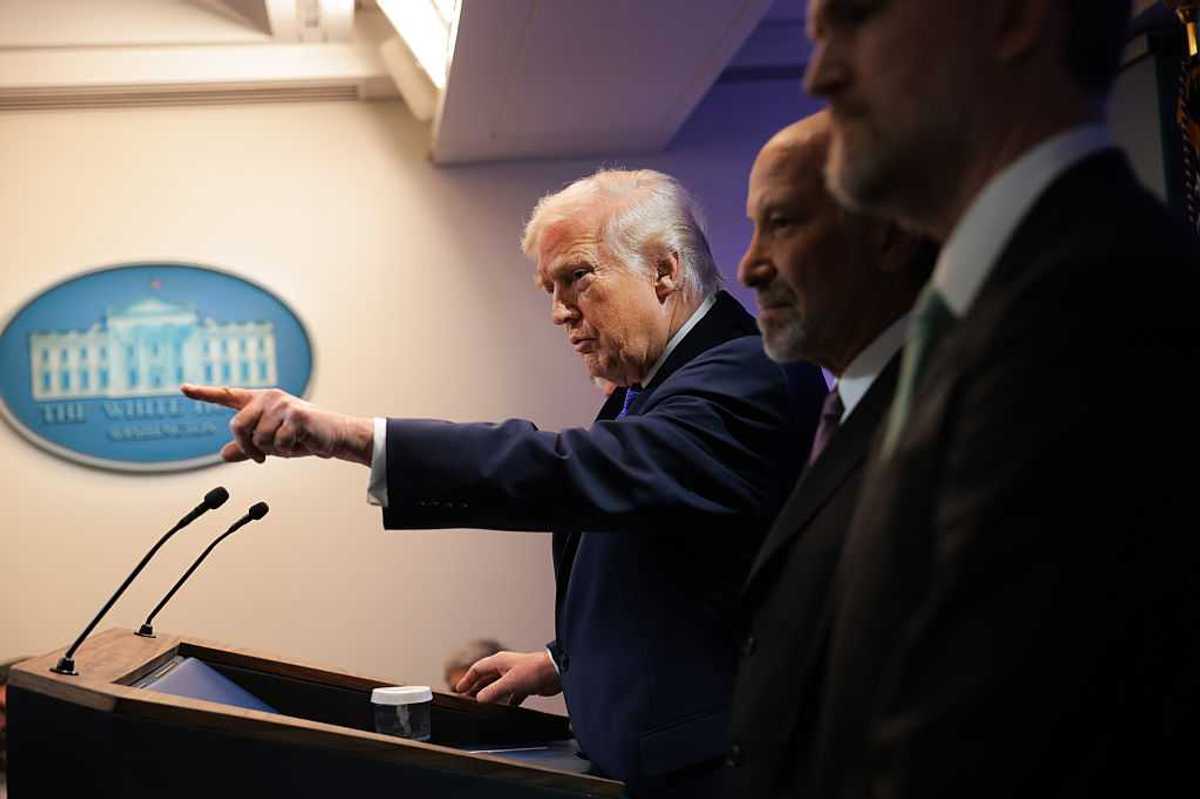

Trump & Hegseth gave Mark Kelly a huge 2028 gift