In medicine, rare moments arise when technological breakthroughs and shifts in leadership create an opportunity for sweeping change. The United States now stands at such a crossroads.

A major advance in artificial intelligence, combined with a shake-up at the highest levels of federal healthcare leadership, has the potential to save hundreds of thousands of lives, make medical care affordable and ease the burnout crisis among doctors and nurses.

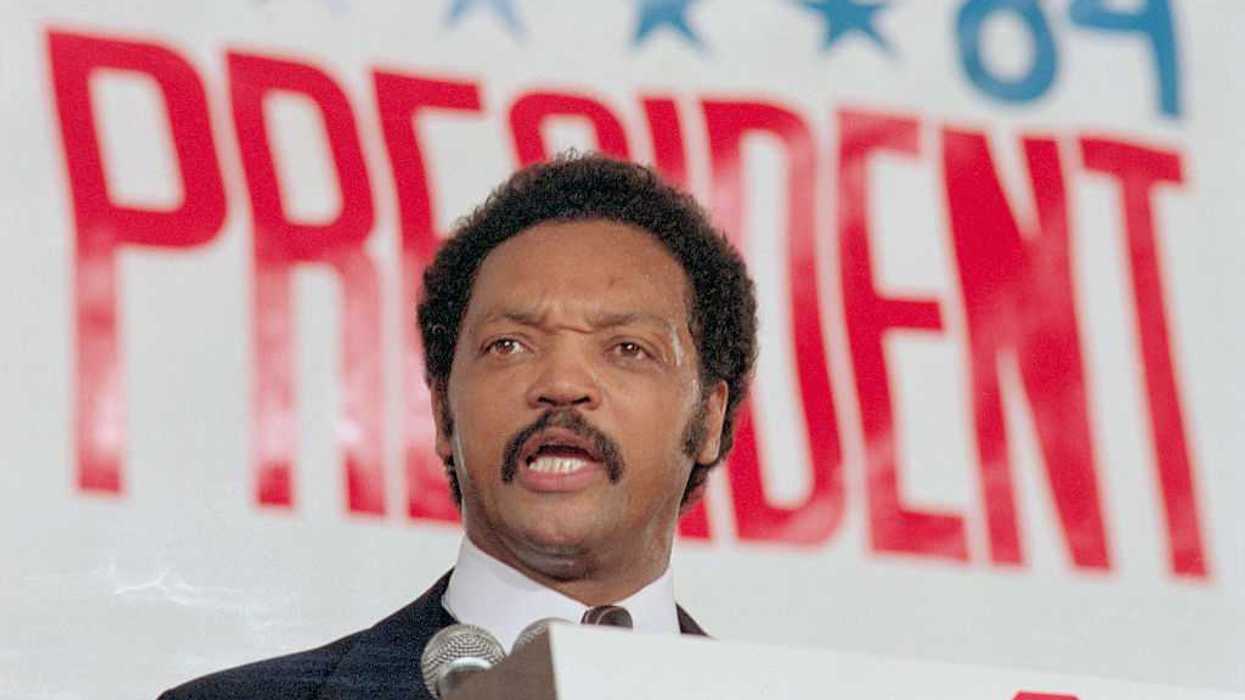

But there’s a risk this potential will go unrealized. The newly appointed Secretary of Health and Human Services (HHS), Robert F. Kennedy Jr., and Dr. Marty Makary, the incoming head of the Food and Drug Administration (FDA), must move swiftly to capitalize on what U.S. Vice President J.D. Vance recently called “one of the most promising technologies we have seen in generations.”

A breakthrough in AI development

For the first time, generative AI isn’t solely the domain of billion-dollar companies. Instead, entrepreneurial and midsize companies can build tools for patients without having to raise massive amounts of capital.

A new generative AI model, DeepSeek-V3, recently emerged from China. Unlike models built by OpenAI, Google or Anthropic, it wasn’t developed with billions of dollars in funding. Reportedly developed for less than $6 million, DeepSeek used a technique called “knowledge distillation,” which lets a GenAI application learn from existing models more quickly, cheaply and efficiently.
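For readers curious about what “knowledge distillation” means in practice, here is a minimal sketch of the general idea: a smaller “student” model is trained to match the softened output probabilities of a larger “teacher” model. This is a textbook illustration, not DeepSeek’s actual training code. The function names, the example scores and the temperature value are all assumptions made for this example.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's and student's softened outputs.

    Hypothetical helper: training would adjust the student to shrink this value.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Made-up scores over three possible answers.
teacher = [2.0, 1.0, 0.1]
print(distillation_loss(teacher, [2.0, 1.0, 0.1]))  # identical outputs: no loss
print(distillation_loss(teacher, [0.1, 1.0, 2.0]))  # mismatched outputs: positive loss
```

The loss is zero when the student already agrees with the teacher and grows as their answers diverge, which is what lets a small model inherit much of a large model’s behavior without repeating its full training.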

While DeepSeek is free for Americans to use, there are serious concerns about its data retention policies and the privacy of data stored on servers in China. However, the biggest advance won’t come from the use of DeepSeek in America but from the rapid advancement of American open-source AI packages. This means any company, researcher or startup will soon be able to access and refine these packages to build tools for patients. If RFK Jr. and Makary act quickly, they can unlock AI’s full potential before red tape strangles it.

But where will innovative companies find the biggest opportunity to save lives and make medical care affordable?

America is currently mired in an urgent and worsening crisis of chronic disease, which affects 60% of Americans and drives 70% of healthcare costs. Right now, most chronic diseases are poorly managed. Hypertension, diabetes, and heart failure remain uncontrolled in at least 40% of U.S. cases, leading to millions of avoidable strokes, heart attacks, kidney failures and cancers each year. According to CDC estimates, effective control of these conditions would prevent 30–50% of these life-threatening events.

The future is here and now

Rather than spending hundreds of millions to build large language models from scratch, healthcare startups will be able to create their own generative AI tools at a fraction of the cost. But unlike today’s broad AI applications, which answer general medical questions, this next generation of generative AI will be different. It will be hyper-specialized, trained on massive amounts of existing (but largely unused) patient data to monitor and manage these chronic diseases.

Right now, 97% of hospital bedside monitor data is discarded, never analyzed to improve patient care. Similarly, today’s GenAI models have never been trained on millions of hours of recorded medical call center conversations and chronic disease management check-ins that provide medical advice and offer personalized care recommendations.

Here’s how it could work: For a newly diagnosed patient with diabetes or hypertension, GenAI-enabled wearable monitors would continuously track blood sugar or blood pressure, analyzing fluctuations in real time. Instead of leaving the patient to wait four months for a routine follow-up visit, the new AI system would identify poor chronic disease control months earlier, provide timely medical advice and flag issues for clinicians when medication adjustments are needed, all for an estimated cost of less than $9 per hour.
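To make the monitoring idea concrete, here is a deliberately simple sketch of the “flag issues for clinicians” step for blood pressure. A real GenAI system would be far more sophisticated. The `Reading` type, the 130 mmHg target and the seven-day window are hypothetical values chosen for illustration, not clinical guidance.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Reading:
    day: int          # days since diagnosis
    systolic: float   # mmHg, as reported by a wearable monitor

# Hypothetical settings for illustration only.
TARGET_SYSTOLIC = 130.0
WINDOW_DAYS = 7

def needs_clinician_review(readings, target=TARGET_SYSTOLIC, window=WINDOW_DAYS):
    """Return True when the average of the most recent `window` days exceeds the target."""
    if not readings:
        return False
    latest_day = max(r.day for r in readings)
    recent = [r.systolic for r in readings if r.day > latest_day - window]
    return mean(recent) > target

# Made-up data: one week in range, then a week of elevated readings.
history = [Reading(d, 122.0) for d in range(1, 8)]
history += [Reading(d, 145.0) for d in range(8, 15)]
print(needs_clinician_review(history))  # True: flagged in week two, not month four
```

Averaging over a window rather than reacting to a single reading keeps one bad measurement from triggering an alert, while a sustained rise is still caught within days.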

For heart failure patients, GenAI-driven monitoring tools would assess daily clinical status, detecting subtle signs of deterioration before a full-blown crisis occurs. Instead of being rushed to the hospital two days later when they can’t breathe, patients and their doctors would receive early alerts, allowing for immediate intervention and eliminating the need for hospitalization.

These disease-specific GenAI agents won’t replace doctors. They’ll fill the gaps between office visits, identify patients at risk, and provide continuous and data-driven care, lowering costs and decreasing daily demands on clinicians.

The FDA must modernize its approach to AI in medicine

Despite AI’s potential to save lives and lower healthcare costs, outdated FDA regulations threaten to stall these innovations before they can reach patients.

The agency has long treated AI like a traditional drug or medical device, demanding information on the data sources and expecting years-long clinical trials. This isn’t how GenAI operates. Unlike pharmaceuticals that keep the same chemical structure, GenAI systems continuously learn and improve—a process driven by the application itself.

RFK Jr. and Makary have a rare opportunity to fix the burdensome regulatory process and lower the barriers to implementation. While Kennedy’s stance on vaccines has drawn criticism, his stated commitment to public health and tackling chronic disease aligns with what GenAI can achieve. Meanwhile, Makary has built a reputation for patient safety and challenging outdated medical policies. He is likely to recognize the value GenAI provides for patients.

A new AI approval framework

Rather than forcing GenAI-driven disease management programs to fit into an antiquated approval model, HHS and the FDA should encourage the development of these programs and fast-track implementation by:

- Prioritizing GenAI applications that focus on diabetes, hypertension, heart failure and similarly high-impact chronic diseases.

- Comparing GenAI-driven programs to existing clinician-led models rather than some hypothetically perfect model. When GenAI-powered disease management tools can outperform humans by at least 10% in advice quality, successful disease control and patient satisfaction, they should be given FDA approval.
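The proposed approval bar can be written down as a simple check. The sketch below reads “at least 10%” as a relative improvement over the clinician-led baseline on all three measures, which is one possible interpretation. The metric names and the scores are invented for the example.

```python
MARGIN = 0.10  # the 10% margin from the proposal, read here as a relative improvement
METRICS = ("advice_quality", "disease_control", "patient_satisfaction")

def meets_approval_bar(ai_scores, clinician_scores, margin=MARGIN):
    """True only if the AI beats the clinician baseline by `margin` on every metric."""
    return all(
        ai_scores[m] >= clinician_scores[m] * (1 + margin)
        for m in METRICS
    )

# Hypothetical scores on a 0-to-1 scale.
clinician_led = {"advice_quality": 0.60, "disease_control": 0.50, "patient_satisfaction": 0.70}
genai_program = {"advice_quality": 0.70, "disease_control": 0.58, "patient_satisfaction": 0.80}
print(meets_approval_bar(genai_program, clinician_led))  # True: clears all three bars
```

Requiring the margin on every measure, rather than on an average, means a tool cannot win approval by excelling at patient satisfaction while falling short on disease control.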

As U.S. life expectancy remains stagnant, and over half the population can’t afford medical care, the window for action is narrowing. RFK Jr. and Makary must act now to modernize the approach HHS and the FDA take to GenAI. If not, bureaucratic inertia will lock American medicine in the past.

Dr. Robert Pearl is a Stanford University professor, Forbes contributor, bestselling author, and former CEO of The Permanente Medical Group.