Kevin Frazier is an Assistant Professor at the Crump College of Law at St. Thomas University. He previously clerked for the Montana Supreme Court.

New AI tools, like ChatGPT, threaten that horrible, wonderful process of trying to find the right words. Even as I typed that sentence, words suggested by my phone danced above the keyboard—passively steering me but directing me nonetheless.

These simple tools save time, right? And they assuredly reduce typos, correct? Maybe they even help us communicate with one another by increasing the odds that everyone uses similar phrases. Surely that’s a good thing?

Soon AI tools will offer to replace our critical thinking in other contexts too. Need to decide who to vote for? In the near future, you may engage with AI chatbots trained to emulate political candidates. Rather than go door to door, these candidates will develop and release bots that aim to persuade you to vote a certain way. Who needs the Iowa State Fair to evaluate a candidate in person when you can just ask “the candidate” any question you want by “talking” with their bot?

AI tools also shape what news we read and social media comments we see--in fact, they have done so for several years. And, in some cases, AI tools have taken over the “boring” parts of our jobs. Some lawyers, for instance, have turned to ChatGPT to conduct legal research and review documents.

Are these gains in convenience worth what we lose in exchange? No. In fact, it’s the sort of deal that the playground bully would offer: trading you the basketball with a leak for your spot on the best swing.

The lesson is that convenience always comes at a cost.

So what are we unwilling to give up for a little more convenience? If we don’t identify the skills, tasks, and activities that are fundamental to being human, then there’s a chance that AI will not only take over those core parts of being human but actually reduce our ability and willingness to do the very things that distinguish and define us. Folks in the AI safety space call this “enfeeblement” -- I prefer to think of it as a loss of our humanity.

Our willingness to embrace the added seconds or minutes or, god forbid, hours to do something without the aid of ChatGPT and other AI tools may soon fade. After all, tools of convenience have ruthlessly killed other things--like the joy of sending and receiving a handwritten letter.

So to protect our humanity we have to proactively declare what we regard as fundamentally human endeavors and fend off the urge to outsource those endeavors to tools of convenience.

This humble (and short) column will not try to list those endeavors. My hope is instead to start a conversation about the spaces we want to keep AI-free, or at least as AI-free as possible. Given the significance of the upcoming 2024 election, I think starting that conversation on the use of AI tools in democratic activities makes a lot of sense.

Should, for example, candidates be able to use AI chatbots to impersonate them? If so, should they have to provide a disclaimer that the bot is, in fact, not the candidate? May political parties release ads informed by AI tools to appeal specifically to you based on the mountains of data they have compiled about you?

I know my answers to these questions, but I want to know yours. We need to debate what makes us…well…us, if we are going to have any chance of developing norms, regulations, and laws that shield fundamental human endeavors from the dangers of convenience. What would you declare "AI Exclusionary Zones" and why? Such zones may seem like an odd thing to discuss, but if we don't shield them, convenience will conquer.

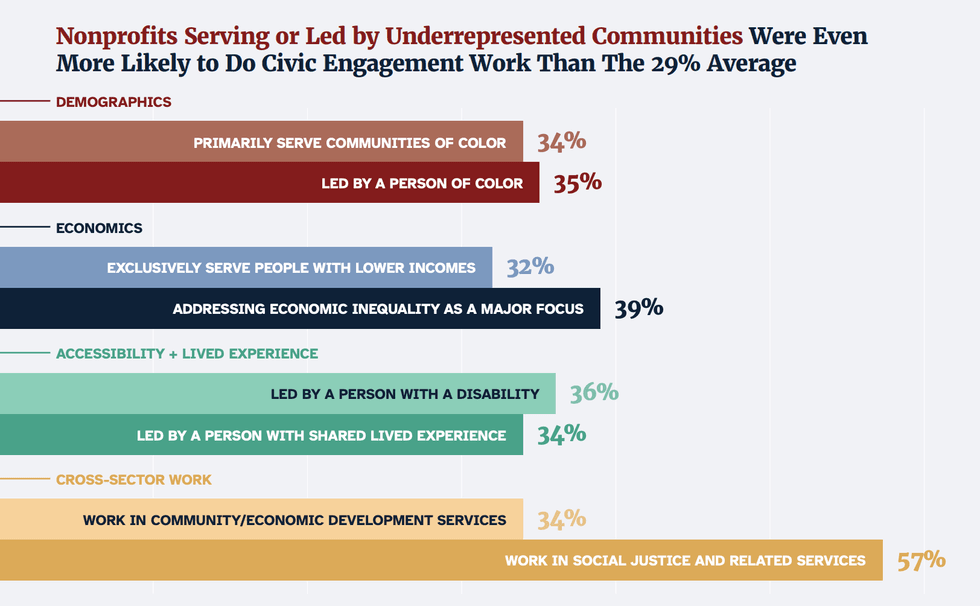

"On the Frontlines of Democracy" by Nonprofit Vote,
