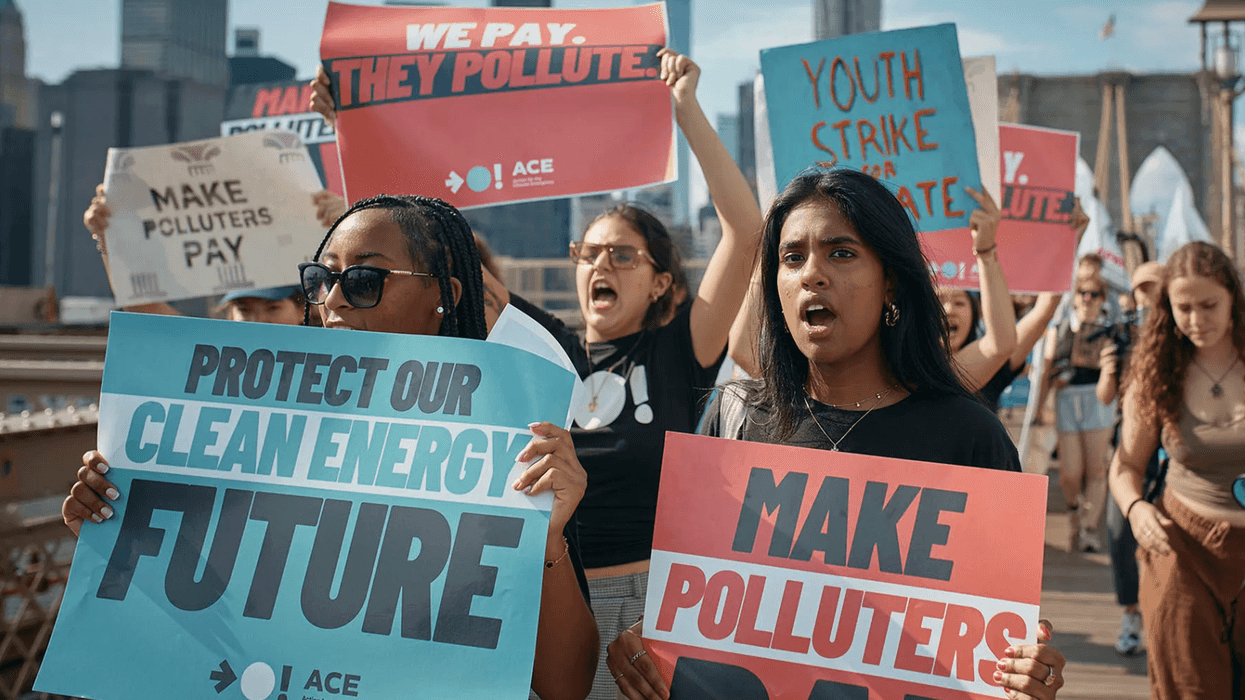

Last month, Matthew and Maria Raine testified before Congress, describing how their 16-year-old son confided suicidal thoughts to AI chatbots, only to be met with validation, encouragement, and even help drafting a suicide note. The Raines are among multiple families who have recently filed lawsuits alleging that AI chatbots were responsible for their children’s suicides. These deaths underscore the argument now playing out in federal courts: artificial intelligence is no longer an abstraction of the future; it is already shaping life and death.

And these teens are not outliers. According to Common Sense Media, a nonprofit dedicated to improving the lives of kids and families, 72 percent of teenagers report using AI companions, often relying on them for emotional support. This dependence is developing far ahead of any emerging national safety standard.

Notwithstanding the urgency, Congress has responded with paralysis, punctuated only by periodic attempts to stop others from acting. Senate Commerce Chair Ted Cruz insists that a ten-year federal moratorium blocking states and cities from passing their own AI laws is “not at all dead,” despite bipartisan opposition that kept it out of the summer budget bill. He has now doubled down with the SANDBOX Act, which would let AI companies sidestep existing protections by certifying the safety of their own systems and winning renewable waivers from agency oversight. Meanwhile, the Trump administration’s “AI Action Plan” rolls back Biden-era safety standards, threatens states with punishment for regulating, and promises to “unleash innovation” by removing so-called red tape.

This reflects a familiar but flawed assumption: that innovation and safety are fundamentally at odds, and that America must choose between technological leadership and responsible oversight. The idea that deregulation is the path to leadership misunderstands American history, not to mention the law.

Far from stifling growth, regulations have turned legal uncertainty into public confidence, and confidence into robust industries. The railroad industry did not flourish because the government stayed out of the way. It flourished because congressionally mandated standards, such as block signaling and uniform track gauges, restored public trust after deadly collisions. The result reshaped America’s conception of itself, and railroads became the sinews of American economic dominance. Similarly, aviation did not become central to American power until Congress established the Federal Aviation Administration to regulate safety, unify air traffic control, and manage the national airspace system. Pharmaceuticals did not become a global industry until drug safety regulations gave consumers confidence in the products they were prescribed.

When Congress stalls, power moves elsewhere. Already, courts are left to improvise on fundamental questions such as whether AI companies bear liability when chatbots encourage suicide, whether training on copyrighted works is theft or fair use, and whether automated hiring systems violate civil rights laws. Federal judges are making rules without guidance. If this continues, the result will be a patchwork of contradictory precedents that destabilize both markets and public trust.

The internet age serves as a cautionary tale: when Congress chose “light-touch” regulation in the 1990s, courts issued contradictory rulings, forcing lawmakers to scramble. Their fix, Section 230 of the Communications Decency Act (the “twenty-six words that created the internet”), shielded platforms from liability for content posted by their users and, over time, was interpreted so broadly by some courts that even its co-author noted the law had become misunderstood as a free pass for illegal behavior.

In addition to courts, state legislatures are filling this vacuum. About a dozen bills have been introduced in states across the country to regulate AI chatbots. Illinois and Utah have banned AI therapy (bots that provide therapy services), and California has two bills winding their way through the state legislature that would mandate safeguards. But piecemeal lawsuits and a smattering of state laws are not enough. Americans need and deserve more fundamental protections.

Congress should empower a dedicated commission to set enforceable safety standards, establish the scope of legal liability for developers, and mandate transparency for high-risk applications. Courts are built to remedy past harms; Congress is built to prevent future ones by creating agencies with the technical expertise to set safety standards on highly complex and evolving technologies before disasters strike.

Bipartisan momentum around federal coordination is already growing. Senators Elizabeth Warren and Lindsey Graham have introduced legislation to create a new Digital Consumer Protection Commission with authority over tech platforms. Representatives Ted Lieu, Anna Eshoo, and Ken Buck have proposed a 20-member national AI commission to develop regulatory frameworks. Senators Richard Blumenthal and Josh Hawley announced a bipartisan framework calling for independent oversight of AI. Even senators with views as varied as those of Gary Peters and Thom Tillis agree on the need for federal AI governance standards. When progressive Democrats and conservative Republicans find common ground on AI regulation, the time is ripe for action.

To be clear, this is not about choking innovation. It is about ensuring AI does not collapse under the weight of public backlash, market confusion, and preventable harms. Regulation is what stabilizes innovation. America doesn’t lead the world by racing recklessly ahead. We lead when we set the rules of the road: rules that give innovators clarity, give the public confidence, and give democracy control over technologies that already touch life and death.

The parents who testified before Congress are right: their children’s deaths were avoidable. The question is whether lawmakers will act now to prevent more avoidable tragedies, or whether they will continue to abdicate their constitutional responsibility, leaving courts, corporations, and grieving families to pick up the pieces.

Aya Saed is an attorney, a leading voice in responsible AI legislation, and the former counsel and legislative director for U.S. Representative Alexandria Ocasio-Cortez. She is the director of AI policy and strategy at Scope3 and the policy co-chair for the Green Software Foundation. She is a Public Voices Fellow with The OpEd Project in partnership with the PD Soros Fellowship for New Americans.