“[I]t is a massively more powerful and scary thing than I knew about.” That’s how Adam Raine’s dad characterized ChatGPT when he reviewed his son’s conversations with the AI tool. Adam tragically died by suicide. His parents are now suing OpenAI and Sam Altman, the company’s CEO, based on allegations that the tool contributed to his death.

This tragic story has rightly prompted calls for tech companies to make changes and for lawmakers to enact sweeping regulations. While both strategies have some merit, computer code and AI-related laws will not address the underlying issue: our kids need guidance from their parents, educators, and mentors about how and when to use AI.

I don’t have kids. I’m fortunate to be an uncle to two kiddos and to be involved in the lives of my friends’ youngsters. However, I do have first-hand experience with childhood depression and anorexia. Although that was in the pre-social-media days and well before the time of GPTs, I’m confident that what saved me then will go a long way toward helping kids today avoid or navigate the negative side effects that can result from excessive use of AI companions.

Kids increasingly have access to AI tools that mirror key human characteristics. The models seemingly listen, empathize, joke, and, at times, bully, coerce, and manipulate. It’s these latter attributes that have led to horrendous and unacceptable outcomes. As AI becomes more commonly available and ever more sophisticated, the ease with which users of all ages may come to rely on AI for sensitive matters will only increase.

Major AI labs are aware of these concerns. Following Adam’s death, OpenAI has announced several changes to its products and processes intended to identify and support users who seem to need additional help more quickly. Notably, these interventions come at a cost. Altman made clear that prioritizing teen safety would necessarily mean reduced privacy. The company plans to track user behavior to estimate users’ ages. If a user is flagged as a minor, they will be subject to various checks on how they use the product, including limits on late-night use, notification of family members or emergency services after messages suggesting imminent self-harm, and restrictions on the responses they receive when the model is prompted on sexual or self-harm topics.

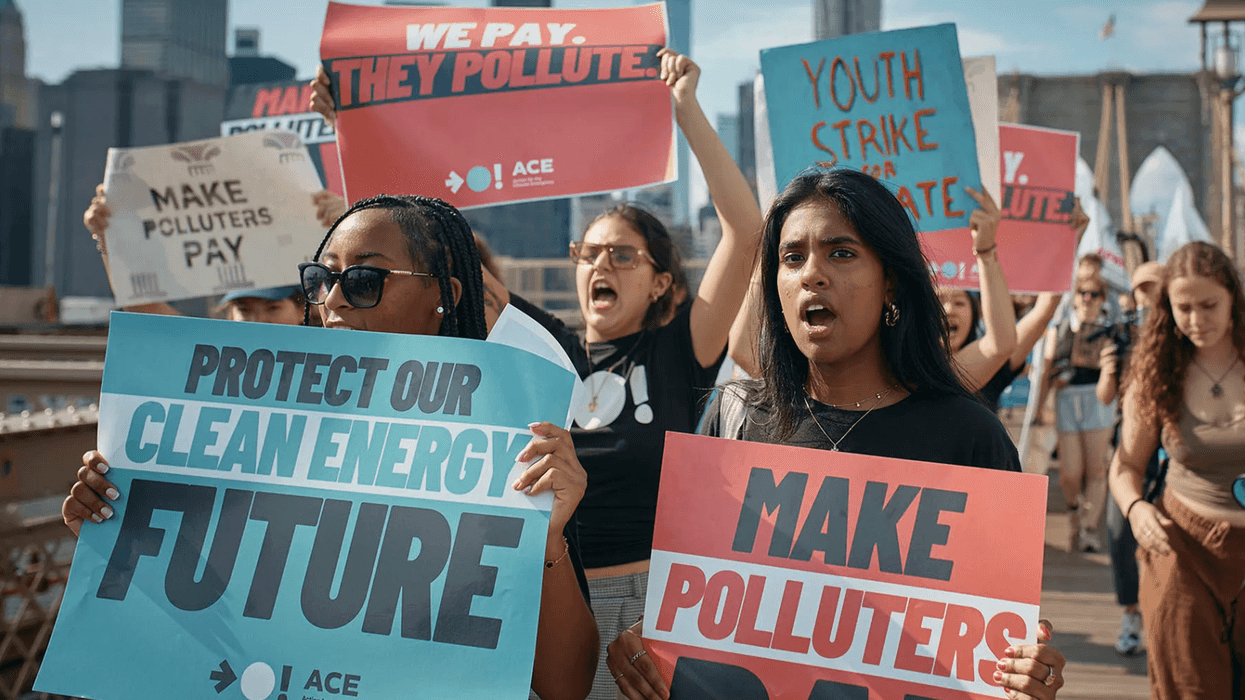

Legislators, too, are tracking this emerging risk to teen well-being. California is poised to pass AB 1064, a bill imposing a wide range of requirements on all operators of AI companions. Among other things, the bill would direct operators to prioritize factually accurate answers over users’ beliefs or preferences. It would also prohibit operators from deploying AI companions that pose a foreseeable risk of encouraging troubling behavior, such as disordered eating. These mandates, which sound feasible and defensible on paper, may have unintended consequences in practice.

Consider, for example, the possibility that operators worried about encouraging disordered eating among teens will ask all users to certify regularly whether they have had concerns about their weight or diet in the past week. These and other invasive questions may shield operators from liability, but they carry a grave risk of worsening a user’s mental health. Speaking from experience, reminders of your condition can often make things much worse, sending you further down a cycle of self-doubt.

The upshot is that neither technical solutions nor legal interventions will ultimately be what helps our kids make full use of AI’s numerous benefits while steering clear of its worst traits. It’s time to normalize a new “talk.” Just as parents and trusted mentors have long played a critical role in guiding their kids through the sensitive topic of sex, they can serve as an important source of information on the responsible use of AI tools.

Kids need someone in their lives with whom they can openly share their AI questions. They need to be able to disclose troubling chats without fear of being shamed or punished. They need a reliable and knowledgeable source of information on how and why AI works. Absent this sort of AI mentorship, we are effectively putting our kids in the driver’s seat of one of the most powerful technological tools without their having even taken a written exam on the rules of the road.

My niece and nephew are well short of the age of needing the “AI talk.” If asked to give it, I’d be happy to do so. I spend my waking hours researching AI, talking to AI experts, and studying related areas of the law. I’m ready and willing to serve as their AI go-to.

Educators, legislators, and AI companies need to help other parents and mentors prepare for a similar conversation. This doesn’t mean training parents to become AI savants, but it does mean helping parents find courses and resources that are accessible and accurate. From basic FAQs that walk parents through the “AI talk” to community events that invite parents to come learn about AI, there are tried-and-true strategies for readying parents for this pivotal and ongoing conversation.

Parents surely don’t need another item added to their extensive and burdensome responsibilities, but this is a talk we cannot avoid. The AI labs are steered more by profit than by child well-being. Lawmakers are not well known for crafting nuanced tech policy. We cannot count exclusively on tech fixes and new laws to tackle the social and cultural ramifications of AI use. This is one of those things that can and must involve family and community discourse.

Love, support, and, to be honest, distractions from my parents, coaches, and friends were the biggest boost to my own recovery. And while we should surely hold AI labs accountable and push our lawmakers to impose sensible regulations, we should also develop the AI literacy required to help our youngsters learn the pros and cons of AI tools.

Kevin Frazier is an AI Innovation and Law Fellow at Texas Law and author of the Appleseed AI Substack.