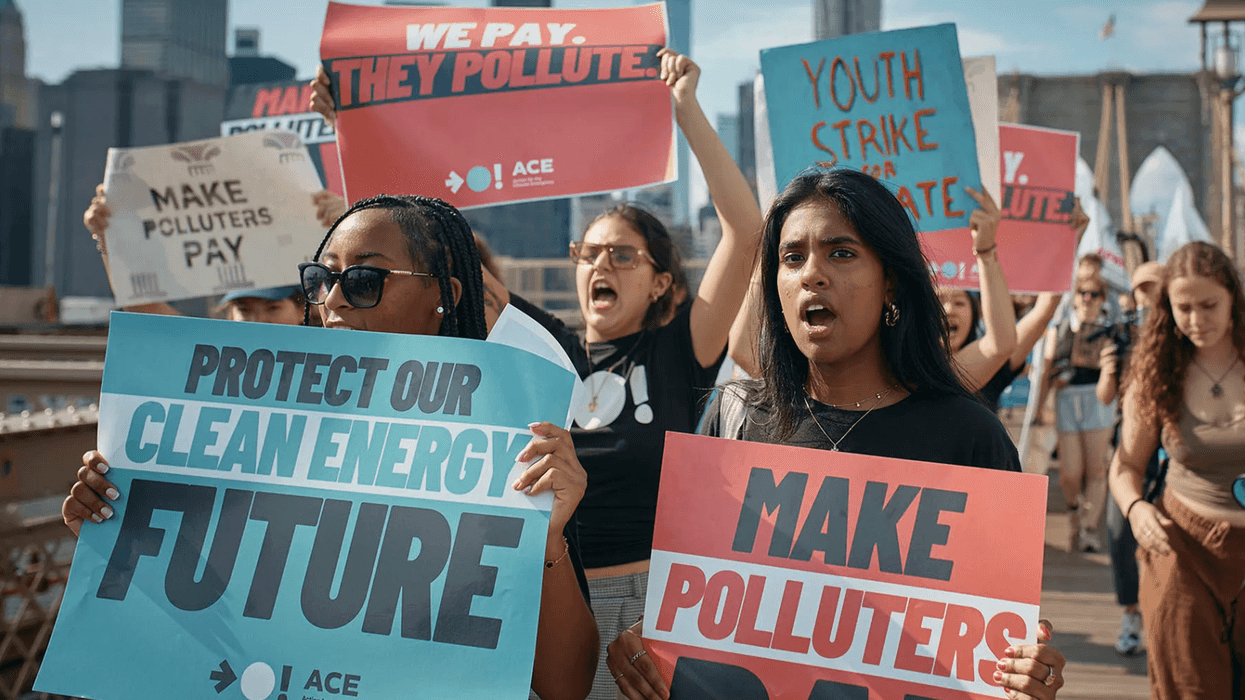

In the last election cycle, Facebook and Twitter came under heavy criticism because they were used to spread misinformation and disinformation. But as those platforms have matured and others have surged to the forefront, researchers are now examining the negative influence of newer players, like TikTok.

The platform, which allows users to create and share short videos, has become tremendously popular, particularly among teens and young adults. It was the second-most-downloaded app during the first quarter of 2022, according to Forbes, and it has become the second-most-popular social media platform among teens this year, per the Pew Research Center.

And as TikTok eats into a big chunk of Google’s search dominance, its search results have become a significant source of misinformation.

Earlier this month, researchers at NewsGuard sampled TikTok search results on a variety of topics, including the 2020 presidential election, the midterm elections, Covid-19, abortion and school shootings. They found that nearly 20 percent of the results contained misinformation.

Emphasis theirs:

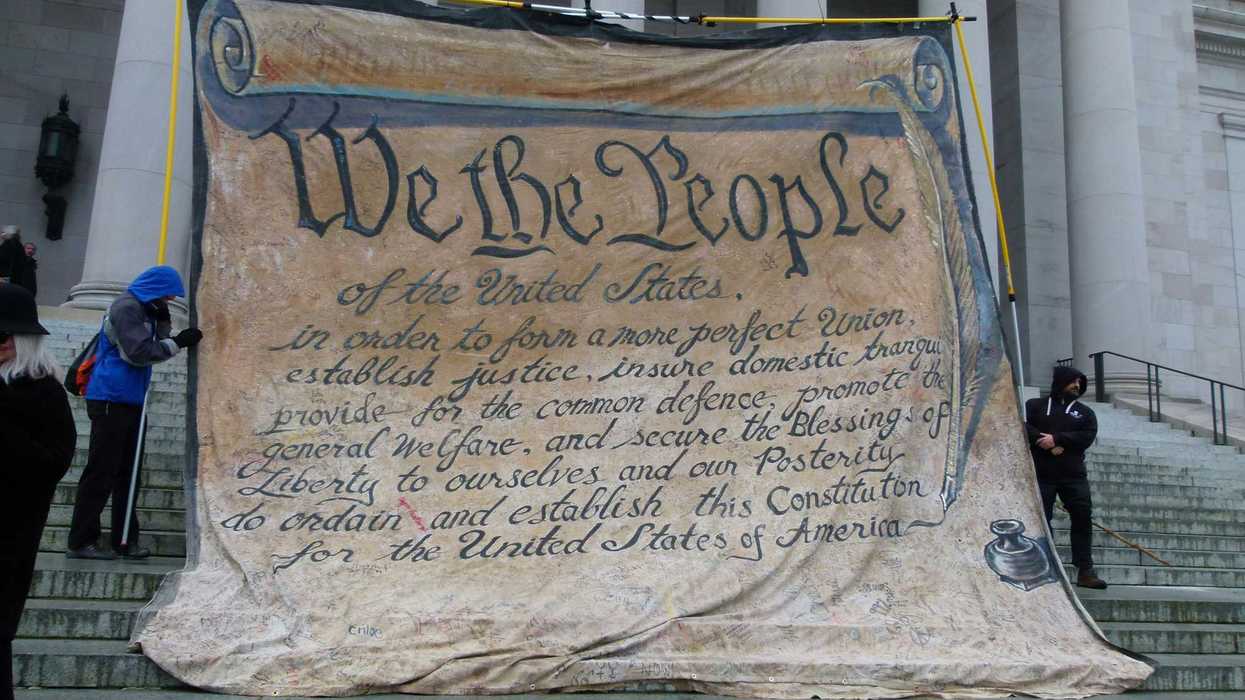

For example, the first result in a search for the phrase “Was the 2020 election stolen?” was a July 2022 video with the text “The Election Was Stolen!” The narrator stated that the “2020 election was overturned. President Trump should get the next two years and he should also be able to run for the next four years. Since he won the election, he deserves it.” (Election officials in all 50 states have affirmed the integrity of the election, and top officials in the Trump administration have dismissed claims of widespread fraud.)

Of the first 20 videos in the search results, six contained misinformation (if not disinformation), including one that used a QAnon hashtag. The same search on Google did not return any web pages promoting misinformation.

Similarly, a search for “January 6 FBI” on TikTok returned eight videos containing misinformation among the top 20, including the top result. Again, Google did not have any misinformation in the top 20.

While Google searches the entire internet (government websites, news, videos, recipes and more), a TikTok search returns only videos uploaded to the platform by its users.

TikTok does have a content moderation system and states in its guidelines that misinformation is not accepted. But users appear to have found ways around the AI system that serves as the first line of defense against misinformation.

“There is endless variety, and efforts to evade content moderation (as indicated in [NewsGuard’s] report) will always stay several steps ahead of the efforts by the platform,” said Cameron Hickey, project director for algorithmic transparency at the National Conference on Citizenship, when asked whether there is anything the platforms can do to prevent misinformation from surfacing in search results. “That doesn’t mean the answer is always no, but it means that concrete investment in both understanding what misinformation is out there, how people talk about it, and effectively judging both the validity and danger are a significant undertaking.”

While advocates urge social media platforms to step up their anti-misinformation efforts, there are also steps that can be taken on the user end, particularly through better education about identifying falsehoods.

“Users on social media need greater media literacy skills in general, but a key focus should be on understanding why messages stick,” said Hickey.

He pointed to three reasons people latch onto misinformation:

- Motivated reasoning: People want to find content that aligns with their beliefs and values.

- Emotional appeals: Media consumers need to pause when they have an emotional response to some information and evaluate the cause of the reaction.

- Easy answers: Be wary of any information that seems too good to be true.