Daniel O. Jamison is a retired attorney.

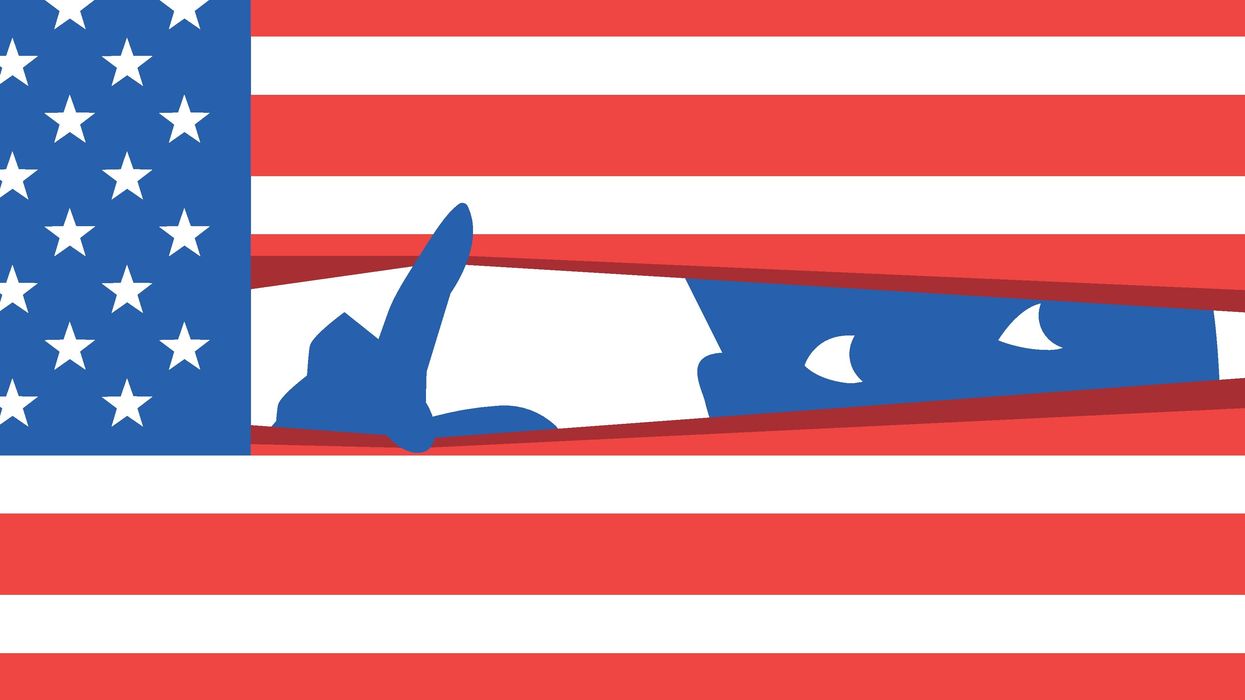

In his classic novel “1984,” George Orwell described a frightening dystopian future where individual freedom is lost as technology enables “Big Brother” to watch, monitor and direct everyone. Big Brother has now come to American colleges and universities.

College students often must log into course management programs to take classes. These systems gather information for the professor on how much time is spent looking at readings and whether course messages have been checked. Professors can insist that students read course books online, which allows the professor to check if pages were actually read, when they were read and how much time was spent on each page.

Professors can monitor how long one takes to complete assignments and quizzes. Website visits, keystrokes, link clicks, and mouse movements are recorded. Professors can insist that one's camera be turned on so that tests can be proctored through facial recognition. Never mind that algorithms can flag innocent movements of the test-taker as suspicious or fail to register a Black person's face.

Card swipes allow tracking of one’s use of the library. Then there are the cameras in the parking areas that not only read your license plate to see whether you have a parking pass but also show who you are riding with and what you are carrying when you leave the car. Better hide what you don’t want Big Brother to see. If you have not been to the food commons for several days, someone may give you the creeps by showing up at your dorm room to check on you.

At least one presumably can still avoid taking a course that requires reading “War and Peace” in two weeks. Course papers were often written in late-night or all-night cram sessions, and students learned how well they could perform under pressure.

Now one presumably could be flagged as a potential miscreant for socializing, playing intramural sports, watching games, attending whatever else is happening on campus or in the area, and learning the ways and ideas of fellow students instead of spending days cooped up writing a paper.

Early diversity programs of the 1970s allowed students of diverse races, ethnicities, and backgrounds to learn about and from each other. There was, and is, immense value in bringing together people who would otherwise have little or no contact so that they might get to know and appreciate one another.

This is not to say that students should neglect their studies, but how can learning take place if they are so worried about being tracked? They may become hermits and, amid mind-numbing constant studying, fail to see the forest for the trees. Big Brother’s fear-inducing monitoring would appear to make it harder for people to get to know one another. A college education should include learning through in-person social interaction and working together to rise above anger toward one another rather than retreating to “safe spaces.”

This massive data collection is also disturbing beyond the world of academia. What data has been gathered from visits to health websites? Artificial intelligence presumably can sift through the sheer volume of data to zero in on a single person. Autonomy is lost. Adolf Hitler would have been delighted with the assistance of all of this data to rid Nazi Germany of those viewed as “vermin,” not pure of blood or race, or otherwise undesirable. Don’t think it can’t happen here.

The root of the problem is the massive, willy-nilly and unwarranted collection of all of this data in the first place. As reported by Tara Garcia Mathewson, a chief privacy officer at the University of California, San Diego, has stated: “We haven’t had regulator scrutiny, to a great extent, on our privacy practices or our data practices, so our data really do live all over the place, and no one quite knows who has what.”

Except for some broader protections for people with disabilities and for personal health information, federal law and regulations appear for the most part not to address the root problem.

The definition of “education records” in federal post-secondary student privacy law and regulations appears to include much of this massive data, but the regulations do not address the unnecessary massive collection of data in the first place. They appear to address only such topics as who can access “education records” without a student’s consent, a student’s right to see and seek to amend the records (the school is not required to make the amendment), a student’s right to file a complaint with the Department of Education, and the school’s obligation to notify students of these rights.

“Transparency” is no solution. It only lets you know what Big Brother has on you. Big Brother already has the data and can use it, and hacking is always a concern. One can ask professors to accept typed work outside these systems, but the school or professors may not allow it. Otherwise, it appears practically impossible to avoid being monitored entirely.

Government may not have addressed the root of the problem, but schools can address it on their own. Higher education should drop course management systems and limit electronic school communication to email. Our troubled times seem to require surveillance cameras and key card entry systems, but they should be narrowly tailored to documented needs.

Once these changes are made, “1984” should be required reading for students and administrators.
