Kevin Frazier will join the Crump College of Law at St. Thomas University as an Assistant Professor starting this fall. He is currently a clerk on the Montana Supreme Court.

Bad weather rarely causes a plane to crash, but that isn't because nature lacks the power to send a plane woefully off course. In fact, as recently as 2009, a thunderstorm contributed to a crash that killed 228 people.

Instead, two main factors explain why bad weather no longer poses an imminent threat to your longevity. First, we've improved our ability to detect storms. Second, and most importantly, we've acknowledged that the risks of flying through such storms aren't worth taking. The upshot is that when you don't know where you're going or whether your plane can get you there, you should either stop or, if possible, postpone the trip until the path is in sight and the plane is airworthy.

The leaders of AI look a lot like pilots flying through a thunderstorm: they can't see where they're headed, and they're unsure whether their planes are up to the task. Before they crash, we need to steer AI development out of the storm and onto a course where everyone, including the general public, can safely and clearly track its progress.

Despite everyone from Sam Altman, the CEO of OpenAI, to Rishi Sunak, the Prime Minister of the UK, acknowledging the existential risks posed by AI, some AI optimists are ignoring the warning lights and pushing for continued development. Take Reid Hoffman, for example. Hoffman, the co-founder of LinkedIn, has been “engaged in an aggressive thought-leadership regimen to extol the virtues of A.I.” in recent months in an attempt to push back against those raising red flags, according to The New York Times.

Hoffman and others are engaging in AI both-sides-ism, arguing that although AI development may cause some harm, it will also produce societally beneficial outcomes. The problem is that this approach doesn't weigh the magnitude of those goods and evils. And, according to people as tech-savvy as Prime Minister Sunak, those evils may be quite severe. In other words, weighing the good and bad of AI is not an apples-to-apples comparison; it's closer to an apples-to-obliterated-oranges situation (the latter referring to the catastrophic outcomes AI may lead to).

No one doubts that AI development under “clear skies” could bring about tremendous good. For instance, it's delightful to imagine a world in which AI replaces dangerous jobs and generates enough wealth to fund a universal basic income. The reality, however, is that storm clouds have already gathered. The path to any sort of AI utopia is not only unclear but, more likely, unavailable.

Rather than keep AI development in the air under such conditions, we need to issue a sort of ground stop and test how well different AI tools can navigate the chaotic political, cultural, and economic conditions that define the modern era. This isn't a call for a moratorium on AI development; that has already been called for (and ignored). Rather, it's a call for test flights.

“Model evaluation” is the AI equivalent of such test flights. The good news is that researchers such as Toby Shevlane have outlined specific ways for AI developers to use such evaluations to identify dangerous capabilities and to measure how likely AI tools are to cause harm once deployed. Shevlane calls on AI developers to run these “test flights,” share their results with external researchers, and have those results reviewed by an independent, external auditor who assesses whether an AI tool is safe to deploy.

Test flights allow a handful of risk-loving people to try potentially dangerous technology in a controlled setting. Consider that in 2010, one of Boeing's test flights of its 787 Dreamliner resulted in an onboard fire. Only after such glitches were detected and fixed did the plane enter commercial service.

There's a reason we only get on planes that have been tested and that have a fixed destination. We need to mandate test flights for AI development. We also need to determine where we expect AI to take us as a society. AI leaders may claim that it's up to Congress to require such testing and planning, but the reality is that those leaders could, and should, impose such requirements on themselves.

The Wright Brothers did not force members of the public to test their planes — nor should AI developers.

Avoiding disaster by mandating AI testing

Getty Images

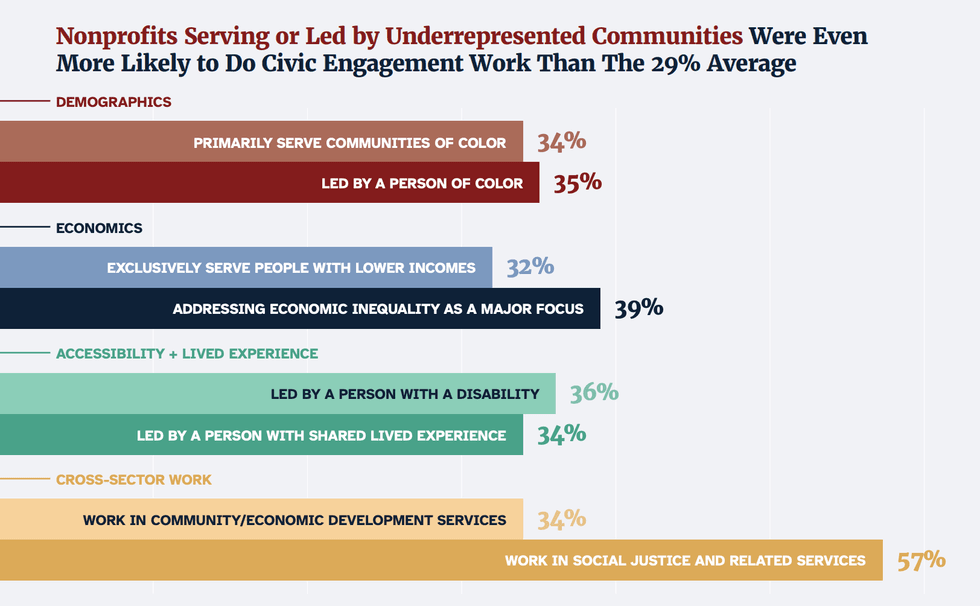

"On the Frontlines of Democracy" by Nonprofit Vote,

"On the Frontlines of Democracy" by Nonprofit Vote,

Trump & Hegseth gave Mark Kelly a huge 2028 gift