The spread of false and misleading information, whether intentional or not, is one of the most consequential issues in America and around the world. And this "information disorder" crisis exacerbates all other issues, from democracy to climate change, from health care to racial justice.

To explore this multipronged issue, the Aspen Institute brought together a group of experts from government, academia, philanthropy and civil society. Following six months of collaboration and research, the 16-person Commission on Information Disorder detailed its findings and recommendations in an 80-page report released Monday.

The report aims to call attention to an urgent issue and provide guidelines for how decision-makers can take immediate action to reduce the impacts of mis- and disinformation.

Because information disorder affects so many other issues, the Aspen Institute sought to make its commission as diverse and wide-ranging as possible. The commission was led by journalist Katie Couric, Color of Change President Rashad Robinson and Chris Krebs, former director of the Cybersecurity and Infrastructure Security Agency.

Recognizing that the eradication of misinformation is an impossible task, the commission instead focused its report on three main priorities: increasing transparency and understanding, building trust, and reducing harms.

"Disinformation is a symptom to the disease of the complex structural inequalities that have plagued society," Robinson said during a webinar presenting the report. "And it's a tactic used to take advantage of things that are already broken and sometimes currently being broken in our society: racial bias, gender inequality, economic inequality, the decline in journalism and so much more."

One reason mis- and disinformation continue to run rampant in the United States is the lack of clear leadership and strategy from public and private entities, the report says. The federal government has been "ill-equipped and outpaced" by new technologies, while social media and tech companies often abuse users' trust and hide important data.

"I think it's incumbent upon any administration — the prior, the current or any future administrations — to be thinking about information disorder, and disinformation specifically, strategically," Krebs said during the webinar.

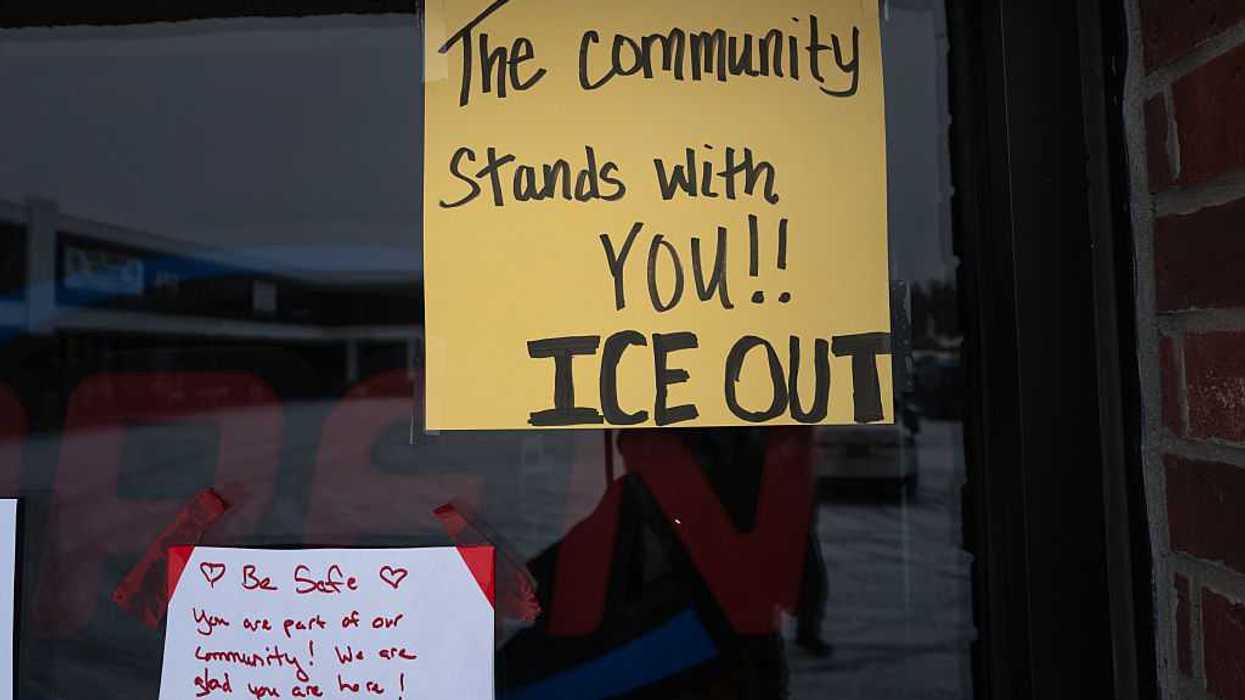

Additionally, news media plays an important role in providing the public with factual information. However, local news outlets, which people tend to trust most, continue to shut down due to financial constraints. Between 2004 and 2018, more than 2,000 newspapers shuttered, leaving 65 million Americans in so-called "news deserts," according to a report by the University of North Carolina. When communities lose access to credible information sources, disinformation and hyperpartisan messaging tend to take their place.

"Local news is such an important part of a well-informed electorate," Couric said during the webinar. "If people don't know what's going on in their community, they are less likely to be involved in the democratic process, in local elections. They're less likely to have an appetite and curiosity about what their government officials are doing, right or wrong."

To counter mis- and disinformation and achieve a better information environment, the commission outlined actionable items across its three main areas of focus.

To increase transparency and understanding, the commission recommends:

- Implementing protections for researchers and journalists who violate platform terms of service by responsibly conducting research on public data of civic interest.

- Requiring platforms to disclose certain categories of private data to qualified academic researchers, so long as that research respects user privacy, does not endanger platform integrity and remains in the public interest.

- Creating a legal requirement for all social media platforms to regularly publish the content, source accounts, reach and impression data for posts that they organically deliver to large audiences.

- Requiring social media platforms to disclose information about their content moderation policies and practices, and produce a time-limited archive of moderated content in a standardized format, available to authorized researchers.

- Requiring social media companies to regularly disclose, in a standardized format, key information about every digital ad and paid post that runs on their platforms.

To build trust, the commission recommends:

- Endorsing efforts that focus on exposing how historical and current imbalances of power, access and equity are manufactured and propagated with mis- and disinformation, and on promoting community-led solutions for forging social bonds.

- Developing and scaling communication tools, networks and platforms that are designed to bridge divides, build empathy and strengthen trust among communities.

- Increasing investment and transparency to further diversity at social media companies and news media as a means to mitigate misinformation arising from uninformed and disconnected centers of power.

- Promoting substantial, long-term investments in local journalism that informs and empowers citizens, especially in underserved and marginalized communities.

- Promoting new norms that create personal and professional consequences within communities and networks for individuals who willfully violate the public trust and use their privilege to harm the public.

- Improving U.S. election security and restoring voter confidence with improved education, transparency and resiliency.

And to reduce harms, the commission recommends:

- Establishing a comprehensive strategic approach to countering mis- and disinformation, including a centralized national response strategy, clearly defined roles and responsibilities across the executive branch, and identified gaps in authorities and capabilities.

- Creating an independent organization with a mandate to develop systemic misinformation countermeasures through education, research and investment in local institutions.

- Investing and innovating in online education and platform product features to increase users' awareness of and resilience to online misinformation.

- Holding superspreaders of mis- and disinformation to account with clear, transparent and consistently applied policies that enable quicker, more decisive actions and penalties, commensurate with their impacts — regardless of location, political views or role in society.

- Amending Section 230 of the Communications Decency Act to 1) withdraw platform immunity for content that is promoted through paid advertising and post promotion and 2) remove immunity as it relates to the implementation of product features, recommendation engines and design.