Coral is vice president of technology and democracy programs and head of the Open Technology Institute at New America. She is a public voices fellow with The OpEd Project.

2023 was the year of artificial intelligence. But much of the discussion has centered on extremes – the possibility of extinction versus the opportunity to exceed human capacity. Reshma Saujani, the founder of Girls Who Code, suggests that we don’t have to choose between ethical AI and innovative AI, and that if we focus solely on fear, then that just might be the AI future we get. So how do we foster an AI future worth building?

In some ways, innovations like ChatGPT represent uncharted territory in the realm of technology. Having worked at the intersection of government and public interest technology for nearly 20 years, I know that AI is not new, and the past year’s intense focus mirrors previous digital tech waves. But I would offer that as we think about how AI evolves, there are three important lessons from the past that we should consider in order to properly harness the benefits of this technology for the public good.

The first lesson serves as a clear warning: Timelines are often detached from the technology's true readiness. Just as with autonomous vehicles and commercial Big Data initiatives, industry-set transformation timelines are often prematurely optimistic, driven by investor desires to scale. That pressure drives rapid deployment without adequate social deliberation and scrutiny, thereby jeopardizing safety. We’ve seen the impacts on the road and in cities, and with AI we’re seeing the exponential growth of online nonconsensual images and deepfakes.

Second, these technologies have lacked go-to-market strategies, which has undercut their ability to scale. They have eventually stalled in funding and development, in part, I would argue, because they lacked a clear public value. While we can marvel at the idea of being picked up by an autonomous car or navigating a “smart city,” all of these technologies need paying customers. Government procurement cycles failed to transform cities into data-driven metropolises of the future, and AVs are too expensive for the average driver. OpenAI has only just released a business version of ChatGPT, and pricing is not public. The monetization strategy for these tools is still in development.

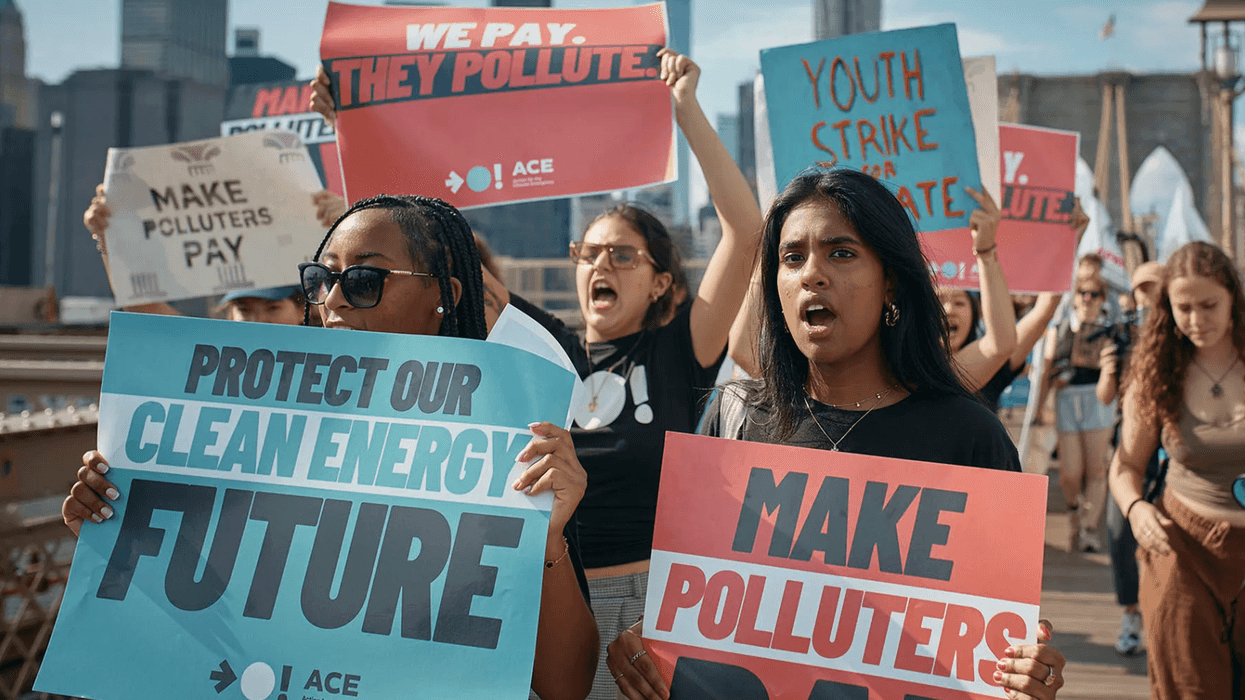

During my tenure at the Knight Foundation, we invested $5.25 million to support public engagement in cities where autonomous vehicles were deployed, in order to understand sentiment and engage communities on their deployment. Demonstrations and community engagement were essential to addressing the public’s skepticism and sparking curiosity. What was eye-opening to me was that regardless of how complex the technology, communities could envision beneficial use cases and public value. But their vision differed from technologists’ and investors’ priorities, as in the case of autonomous delivery technologies. Bridging this gap can speed up adoption.

Lastly, widespread adoption of AI is unlikely without the proper infrastructure. A recently released peer-reviewed analysis showed that by 2027, AI servers may use as much annual electricity as Argentina. Such a massive amount of energy will undoubtedly raise concerns regarding AI’s impact on the environment, but it also calls into question our capacity to meet the moment. Additionally, AI requires fast internet. The United States has only just begun to roll out $42.5 billion in funding to expand high-speed internet access so that we can finally close the digital divide. If we care about equity, we must ensure that everyone has access to the fast internet they need to benefit from AI.

To be sure, every tech advance is different, so we cannot expect historical tech advances, like Smart Cities or autonomous vehicles, to fully predict how AI will evolve. But looking to history is important, because it often repeats itself, and many of the issues encountered by earlier technologies will come into play with AI, too.

To scale AI responsibly, fast, affordable internet is crucial, but almost 20 percent of Americans are currently left out. Congress can take action by renewing programs for affordable internet access and ensuring Bipartisan Infrastructure Law investments align with an AI future. The public value of AI can be enhanced by not relying solely on investor interests. While most Americans are aware of ChatGPT, only one in five has actually used it. We need proactive engagement from all stakeholders – including governments, civil society and private enterprises – to shape the AI future in ways that bring tangible benefits to all. True public engagement, especially from marginalized communities, will be key to ensuring that the full extent of unintended consequences is explored. No group can speak to the impact of AI on a particular group of people better than the impacted individuals themselves, and we have to get better at engaging on the ground.

Some of the greatest value of AI lies in applications and services that can augment skills, productivity and innovation for the public good. Not only digital access, but also digital readiness, is essential to harness these benefits. Congress can mandate that federal agencies invest in initiatives supporting digital readiness, particularly for youth, workers and those with accessibility challenges.

But there is no need to rush.

By taking a cue from historical tech advances, like Smart Cities and autonomous vehicles, we can usher in an AI revolution that evolves equitably and sets a precedent for technological progress done right. Only then can we truly unlock the transformative power of AI and create a brighter, more inclusive future for all.