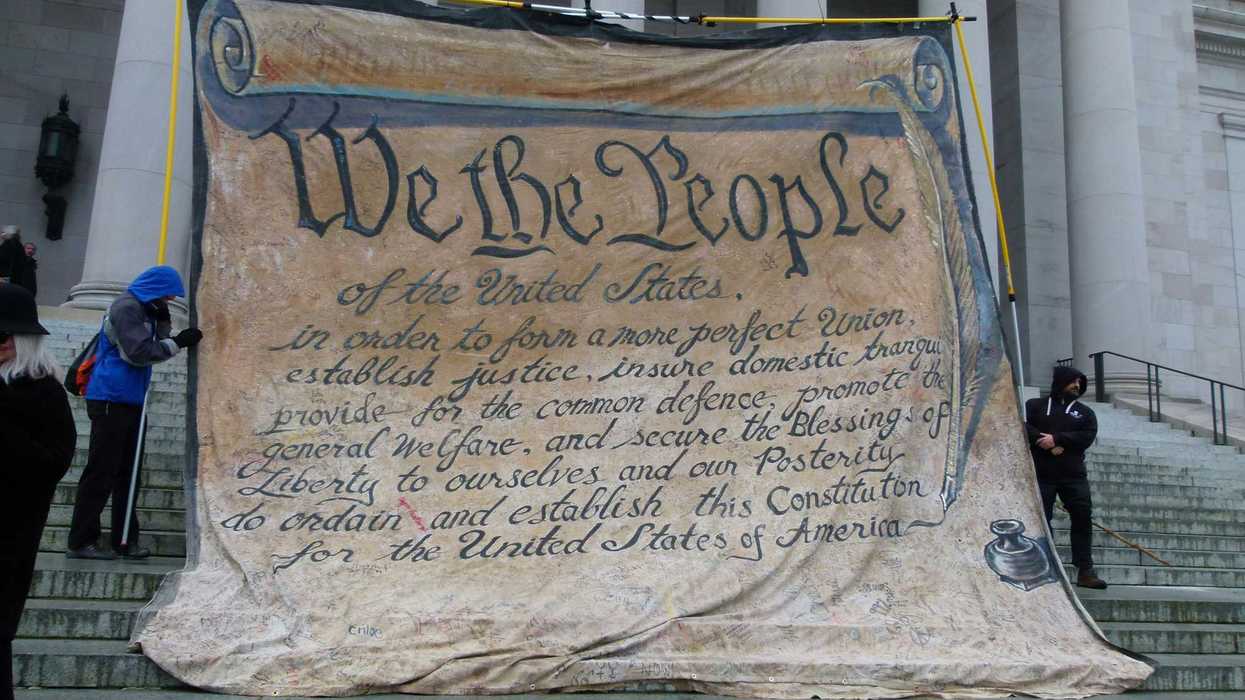

Outdated, albeit well-intentioned, data privacy laws create the risk that many Americans will miss out on proven ways in which AI can improve their quality of life. Thanks to advances in AI, we possess incredible opportunities to use our personal information to aid the development of new tools that can lead to better health care, education, and economic advancement. Yet HIPAA (the Health Insurance Portability and Accountability Act), FERPA (the Family Educational Rights and Privacy Act), and a smattering of other state and federal laws complicate the ability of Americans to do just that.

The result is a system that claims to protect our privacy interests while actually denying us meaningful control over our data and, by extension, our well-being in the Digital Age.

The promise of AI-powered tools, from personalized health monitoring to adaptive educational support, depends on access to quality data. But our current legal framework, built on distrust of corporations and skepticism of individual judgment, creates friction in the very exchanges that could benefit us most.

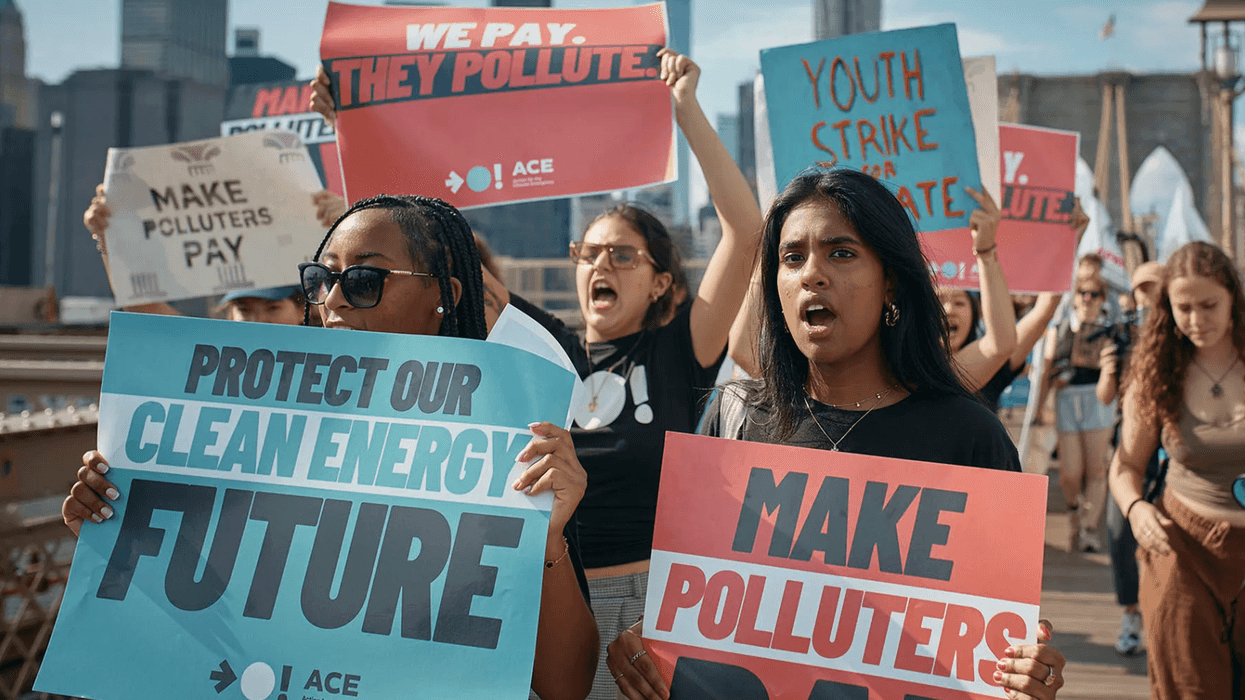

This isn't just a technical problem. It's creating what I call an “AI abyss”: a widening gap between those who can navigate privacy restrictions to access cutting-edge AI services and those who cannot. Think of it as a subset of the larger Digital Divide. Wealthy Americans already pay premium prices for AI-enhanced health monitoring and personalized care. Meanwhile, more than 100 million Americans lack a usual source of primary care, and privacy laws enacted decades ago make it nearly impossible for community health clinics to leverage AI tools that could transform care delivery.

Consider a hypothetical rural community in which a doctor at the local clinic proposes using AI to develop tailored health interventions based on aggregated clinic data and wearable device information. The community is enthusiastic, until a vocal lawyer claims that state law requires handwritten authorization from each patient, with explicit descriptions of every potential data use. The understaffed clinic can't manage the paperwork burden, and the project dies. Now imagine a nearby affluent community that hires a law firm to help it create privately operated “Health Data Clubs,” which pool members' health data and offer AI-driven recommendations.

This pattern repeats across domains in very real ways. Fewer than 15 percent of children in poverty who need mental health support receive care, yet developing reliable AI therapy tools requires exactly the kind of sensitive data that privacy laws most tightly restrict. During the pandemic, 20 percent of wealthy parents hired personal tutors; only 8 percent of low-income parents could afford them. Had AI tutors trained on individual learning patterns been available at that time, the technology could have narrowed that gap, provided that educational privacy laws did not make the necessary data sharing prohibitively complex. The unfortunate irony is that the AI applications with the greatest potential to revive and spread the American Dream are precisely those requiring the most legally protected information.

Incremental amendments to dozens of privacy statutes won't solve this problem. We need a different approach: a federal “right to share” that allows Americans to opt out of state privacy restrictions when they choose. To be clear, the aim is not to inhibit the ability of any individual to make use of as many privacy protections as they see fit. The core aim is instead to recognize that an individual’s data is more valuable than ever, which suggests that individuals should have more control over that data. Again, those who prefer current restrictions would remain fully covered. But those willing to share their data for purposes they value—whether joining health cooperatives or participating in educational research—would have that freedom.

The constitutional authority for such a right is clear. Commercial data exchange via the Internet constitutes interstate commerce, well within Congress's power to regulate. The implementation mechanism, however, may not be so straightforward. In theory, this right could operate in a manner similar to privacy notices and cookie disclosures. Americans could opt out of restrictive state laws through a simple online process, with reasonable opportunities to opt back in. How best to design and stand up this process warrants more attention from interdisciplinary stakeholders. The Federal Trade Commission, which already enforces privacy policies, could handle complaints about failures to honor an individual's expressed preferences.

Of course, no right is absolute. A right to share cannot extend to information directly implicating others' privacy—a family member's genetic data, for instance. Congress could establish narrow, content-neutral limits modeled on existing exceptions to free expression.

The deeper principle here is informational self-determination. Just as the First Amendment protects our right to speak and listen, we should have the right to disclose or withhold our own information. Both rest on the same constitutional value: personal autonomy as a safeguard of democratic life. Nearly 80 percent of Americans trust themselves to make the right decisions about their personal information. Our laws should reflect that trust rather than contradict it.

Kevin Frazier is a Senior Fellow at the Abundance Institute and directs the AI Innovation and Law Program at the University of Texas School of Law.